I am currently a fifth-year Ph.D. student at the NLPR, Institute of Automation, Chinese Academy of Sciences (CASIA), supervised by Prof. Tieniu Tan and Prof. Zhaoxiang Zhang. Prior to that, I obtained my Bachelor's degree in Automation from the Department of Automation, Tsinghua University in 2020. I have also interned at TuSimple. My research interests include computer vision, 3D perception, video generation models, and driving simulation. I am currently exploring fully generative driving simulation.

* indicates equal contribution

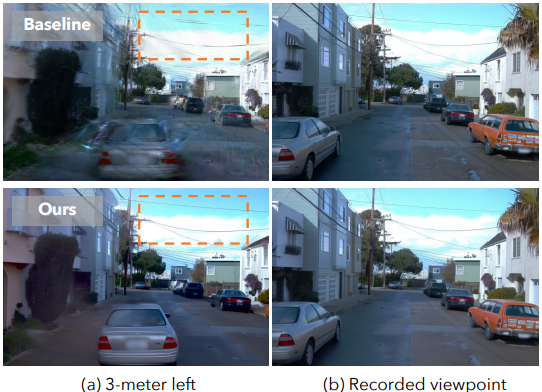

Lue Fan, Hao Zhang, Qitai Wang, Hongsheng Li, Zhaoxiang Zhang. CVPR 2025. [paper] [Page] Following FreeVS, we propose FreeSim, a generation-reconstruction hybrid method for free-viewpoint camera simulation that takes the best of both worlds.

Qitai Wang, Lue Fan, Yuqi Wang, Yuntao Chen, Zhaoxiang Zhang. ICLR 2025. [paper] [Page] [code] FreeVS is the first method that supports high-quality generative view synthesis along free driving trajectories, a crucial step toward generative driving simulation.

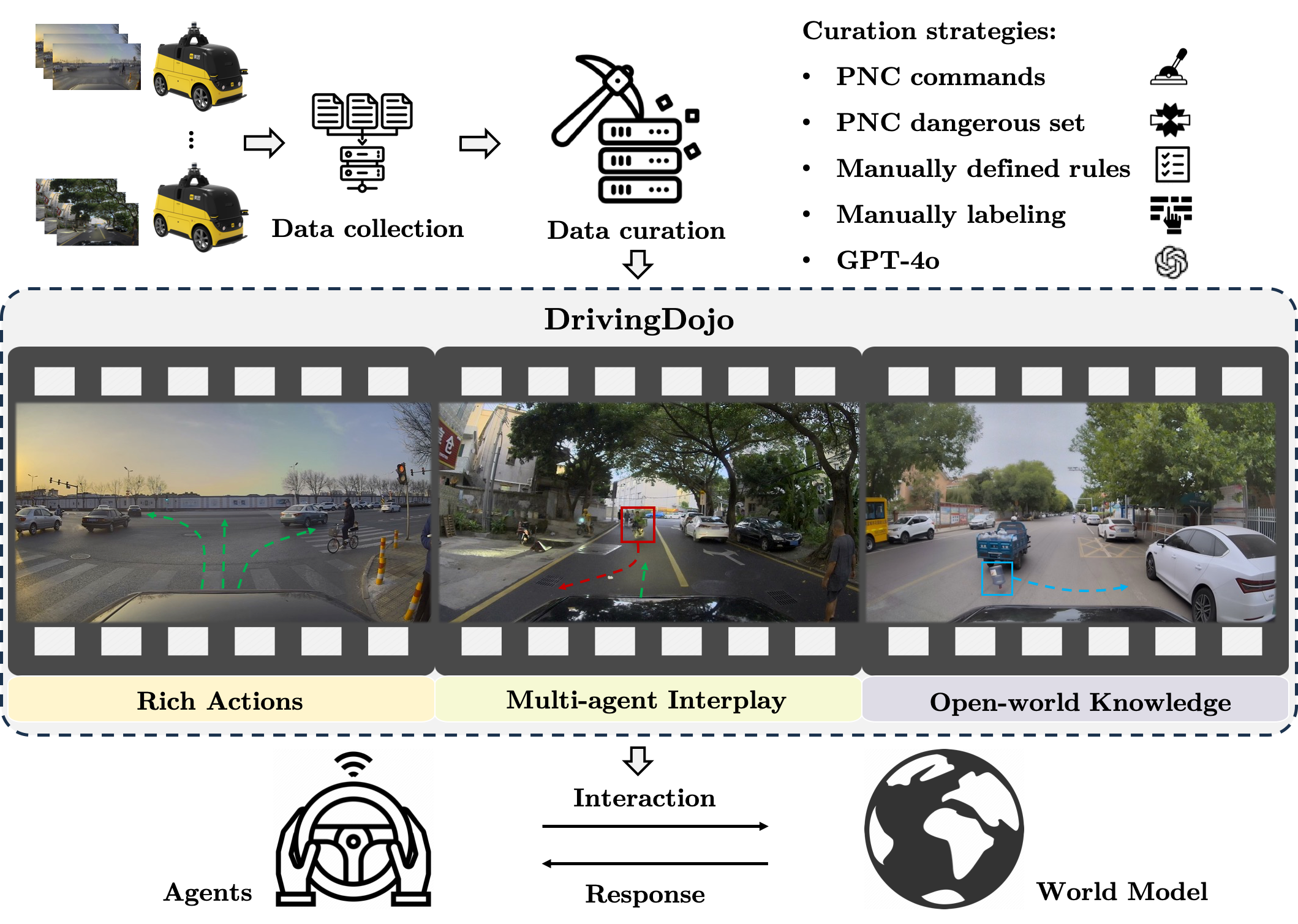

Yuqi Wang*, Ke Cheng*, Jiawei He*, Qitai Wang*, Hengchen Dai, Yuntao Chen, Fei Xia, Zhaoxiang Zhang. NeurIPS 2024, D&B Track. [paper] [Page] [code] The DrivingDojo dataset features video clips with a complete set of driving maneuvers, diverse multi-agent interplay, and rich open-world driving knowledge, laying a stepping stone for future world model development.

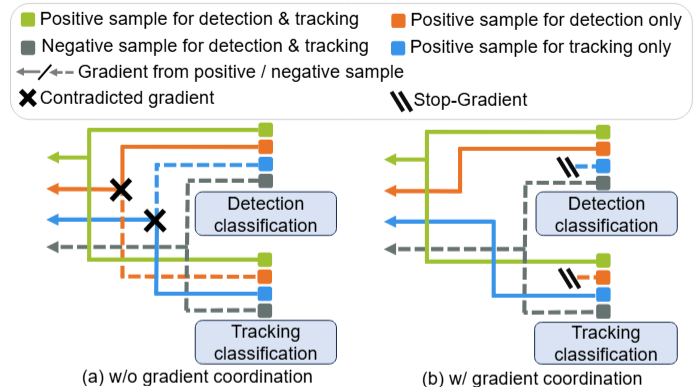

Qitai Wang, Jiawei He, Yuntao Chen, Zhaoxiang Zhang. ECCV 2024. [paper] We resolve the problem that end-to-end multi-object tracking has lagged behind standalone detectors in perception performance, enabling lossless unification of the detection and tracking tasks.

Qitai Wang, Yuntao Chen, Zhaoxiang Zhang. TPAMI. [paper] We propose an uncertain representation of 3D objects to account for the indeterminacy of localizing objects in images.

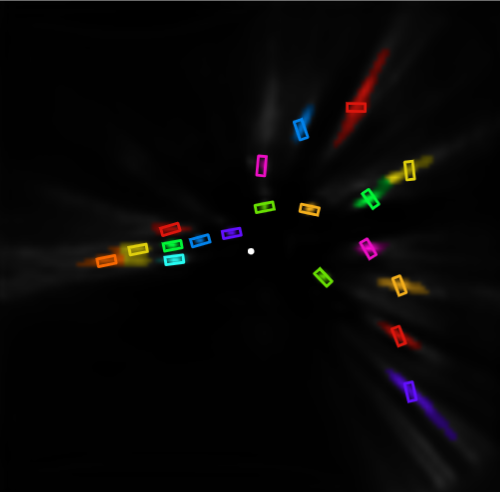

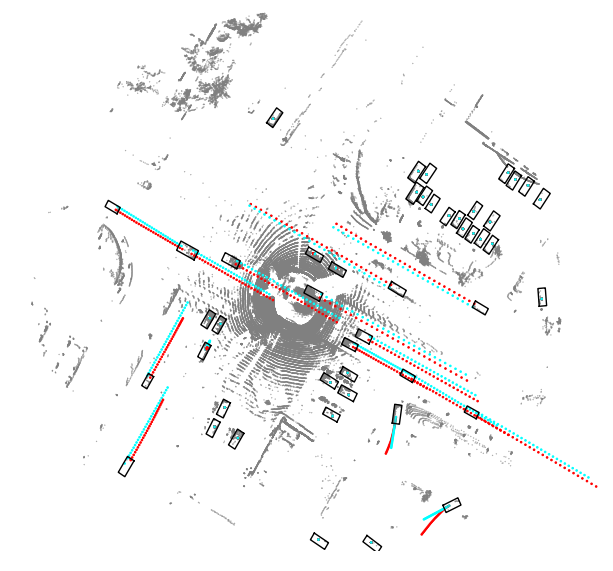

Qitai Wang, Yuntao Chen, Ziqi Pang, Naiyan Wang, Zhaoxiang Zhang. arXiv. [paper] [code] Still the best, fastest, and simplest LiDAR-based 3D multi-object tracker so far.

© Qitai Wang | Last updated: May 12, 2025